The Problem of Immutability

The main problem behind replacing an existing data warehouse with Hadoop is a seemingly innocent concept called immutability. Data in HDFS cannot be changed, they can only be overwritten or appended. This makes Change Data Capture (CDC) and other data warehousing concepts difficult to implement. When Hadoop is used for archival or analytical purposes this is not usually an issue. However, data warehouses alter existing data tables anywhere from daily to multiple times a second.

Workarounds using existing Hadoop tools were invented to address the issue, but they are cumbersome, slow, and unreliable. Many who have attempted these workarounds have concluded that the efforts required are simply not worth the investment.

Previous Attempts

To make a Hadoop cluster based on HDFS and Hive function as a data warehouse, two main approaches evolved: partitioning based on time and/or the “Four-Step Method.”

Time-based partitioning separates the data and stores each month, week, or other time unit of data as a separate file. If changes are detected in a record for the month of July in the originating table, for example, the entire month is overwritten in HDFS. While somewhat effective at keeping data current, this method is needlessly cumbersome. Why overwrite an entire month or week just to update a single record? This is a waste of resources.

The Four-Step Method takes a different, much more complex approach. New data from the originating system are used to create a temporary table, which is then joined to the existing table in a view, which is then used to overwrite the existing data in HDFS at the end of the current time period.

Confused? You’re not alone.

Overcoming Immutability

MapR realized early in the game that immutability would be an issue and created a filesystem that was true read-write. Despite the proprietary nature of MapR-FS it uses the same API as HDFS, which all but negates compatibility issues.

Cloudera recognized the issues that the immutability of data in HDFS caused for their customers, and set to work to develop an alternative that would store data in such a way that would allow updates and changes in an expedient fashion. The result is Kudu, an efficient, updateable file store that acts much like a relational database in everyday use.

Commands like INSERT, UPDATE, and DELETE that are unavailable in HDFS and Hive (or didn’t work like their counterparts in relational databases), now function as expected and scale to previously unimagined sizes of data. Kudu even ups the ease of use by including support for an UPSERT command which chooses whether a record should be updated or inserted based on the primary key. Which brings us to the next point…

Keys to the Kingdom

Another notable difference between Hadoop and relational databases has been the lack of keys in Hive. Keys are essential to the success of Change Data Capture (CDC), the process by which data in the data warehouse are continually updated. Kudu’s support of and requirement for a primary key in each table mean that there is now a mechanism in Hadoop for preventing duplicate data during updates. This was a glaring omission before.

Caveats

It can’t be all sunshine and roses, can it? Since Kudu is a relatively new technology there are limitations that must be considered when using it for data warehousing, some of which include the following:

• Kudu requires primary keys but doesn’t support foreign keys, so linking between tables in the way a relational database would is not yet possible.

• As of version 1.3, Kudu doesn’t support creating tables from files or the LOAD DATA INFILE command. The fastest way to bulk load data is to bring them into HDFS, create a Hive table over them, and then create the Kudu table through a CREATE AS SELECT statement. Another alternative is to interface directly with the API, bypassing ODBC or JDBC drivers. The API works with C++, Java, and now even includes bindings for Python.

• The number of supported data types for columns in Kudu is relatively small and does not include either DATE or TIMESTAMP.

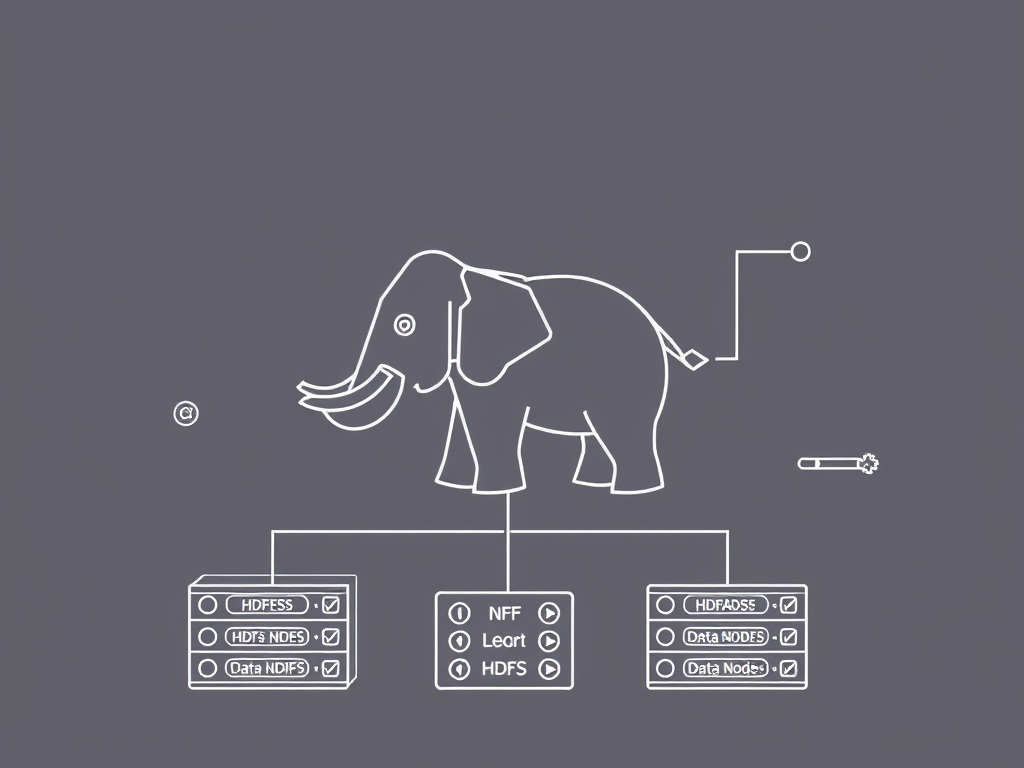

• Supporting a Hadoop data warehouse can be more challenging than with traditional solutions. Hadoop is an ecosystem of components all working together.

Now, Why Would I Do This?

Now that immutability isn’t the limiting issue it was, the question remains: why use a Hadoop data warehouse? This usually boils down to one of two things: price and/or scale. Some switchers cite excessive licensing costs for traditional data warehouses and limitations of the hardware used in those solutions. Hadoop also easily scales past petabytes of data and does so inexpensively.