Ever tried building a chatbot that seemed brilliant in demos but completely fell apart when real users started asking it questions? The gap between “cool AI prototype” and “actually useful enterprise application” is where dreams go to die, and where Databricks Mosaic AI Agent Framework comes to the rescue.

(BTW, if you’re working with Databricks and want to understand more about data pipeline architecture, check out my guide on Delta Live Tables.)

What is Mosaic AI Agent Framework?

Think of Mosaic AI Agent Framework as Databricks’ answer to the question: “How do we build RAG (Retrieval-Augmented Generation) systems that actually work in production?” It’s not just another LLM wrapper – it’s a complete framework for building, evaluating, and deploying AI agents that can reason over your company’s data.

The framework handles all the annoying parts: connecting to your data sources, managing embeddings, evaluating quality, tracking versions, and deploying to production. You focus on the business logic, and Mosaic handles the infrastructure.

Why RAG Systems Fail (And How Mosaic Fixes It)

Let’s be honest about why most RAG implementations suck:

Problem 1: Garbage Retrieval

Your system retrieves completely irrelevant chunks because you didn’t tune your chunking strategy, embeddings are mediocre, or your search algorithm is too simplistic.

Mosaic’s Solution: Built-in support for multiple retrieval strategies, automatic chunking optimization, and vector search through Delta Lake with quality metrics to show you what’s actually being retrieved.

Problem 2: No Evaluation Framework

You have no idea if your RAG is getting better or worse as you make changes. You’re flying blind, relying on vibes and cherry-picked examples.

Mosaic’s Solution: Built-in evaluation framework with MLflow integration. Track metrics like retrieval precision, answer quality, and hallucination rates across versions.

Problem 3: Production Deployment is a Nightmare

Getting from Jupyter notebook to production requires rebuilding everything with proper error handling, monitoring, and scaling.

Mosaic’s Solution: Deploy as a REST endpoint with one command. Includes monitoring, logging, and governance out of the box.

Building Your First Agent

Let’s build a simple RAG agent that can answer questions about your company’s documentation. I’ll show you the actual code, not just theory.

Step 1: Prepare Your Data

First, get your documents into a Delta table. The example below reads plain-text files, one row per line; PDFs and web pages need a parsing step before they can be loaded this way:

from pyspark.sql.functions import input_file_name, monotonically_increasing_id

# Load your documents (`spark` is the SparkSession that Databricks notebooks provide)
df = spark.read.format("text").load("/path/to/docs/")

# Add some metadata
df = df.withColumn("doc_id", monotonically_increasing_id())
df = df.withColumn("source", input_file_name())

# Write to Delta Lake
df.write.format("delta").mode("overwrite").saveAsTable("company_docs")

Step 2: Create Vector Search Index

Now create a vector search index on your documents:

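from databricks.vector_search.client import VectorSearchClient

vsc = VectorSearchClient()

# Create the index
# On Unity Catalog, index and table names are typically fully qualified (catalog.schema.name)
vsc.create_delta_sync_index(
    endpoint_name="company_docs_endpoint",
    index_name="company_docs_index",
    source_table_name="company_docs",
    pipeline_type="TRIGGERED",
    primary_key="doc_id",
    embedding_source_column="value",
    embedding_model_endpoint_name="databricks-bge-large-en"
)

Databricks generates the embeddings and keeps the index in sync with the source table, so there is no embedding pipeline to maintain by hand. With pipeline_type="TRIGGERED", the index updates each time you trigger a sync rather than continuously. Each row is embedded as written, so split long documents into chunks before they land in the table.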

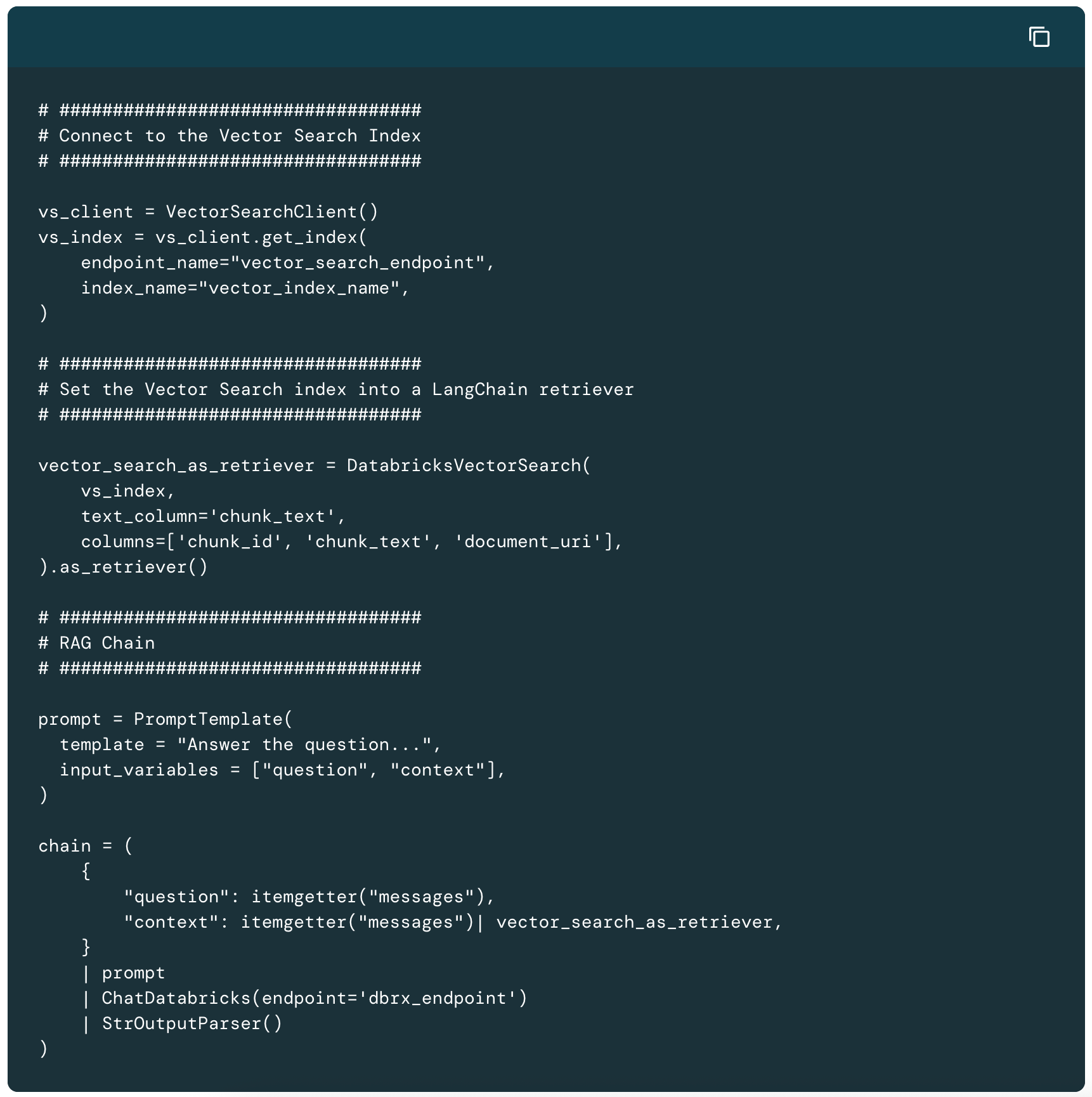

Step 3: Define Your Agent

Now for the fun part – creating the actual agent:

from databricks.agents import Agent
from databricks.agents.retrieval import VectorSearchRetriever

# Define retrieval component
retriever = VectorSearchRetriever(
    index_name="company_docs_index",
    endpoint_name="company_docs_endpoint",
    columns=["value", "source"],
    num_results=5
)

# Create the agent
agent = Agent(
    name="company_docs_assistant",
    retriever=retriever,
    llm_endpoint="databricks-llama-2-70b-chat",
    system_prompt="""You are a helpful assistant that answers questions about company documentation.
Use the provided context to answer questions accurately.
If you don't know the answer, say so - don't make things up.
Always cite which document you got information from."""
)

Step 4: Test It Out

Before deploying, let’s see if this thing actually works:

# Ask a question
response = agent.query("What is our vacation policy?")

print("Answer:", response.answer)
print("Sources:", response.sources)
print("Retrieved chunks:", response.retrieved_chunks)

The response includes not just the answer, but what was retrieved and why. This transparency is crucial for debugging.
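To make that transparency a bit more concrete, here is a minimal sketch of how retrieved chunks are commonly stitched into a prompt with numbered source tags, so an answer can be traced back to what was retrieved. `build_prompt` and the sample chunks are hypothetical and made up for illustration; they are not part of the framework’s API. The `value` and `source` keys simply mirror the columns used in the retriever above.

```python
from typing import Dict, List


def build_prompt(question: str, chunks: List[Dict[str, str]]) -> str:
    """Assemble a RAG prompt with numbered, citable context entries.

    Hypothetical helper: each chunk is a dict with 'value' (text) and 'source'.
    """
    context_lines = [
        f"[{i}] ({chunk['source']}) {chunk['value']}"
        for i, chunk in enumerate(chunks, start=1)
    ]
    context = "\n".join(context_lines)
    return (
        "Answer using only the context below and cite entries like [1].\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


# Made-up chunks standing in for real retrieval results
chunks = [
    {"value": "Employees get 15 days PTO per year.", "source": "hr_handbook.pdf"},
    {"value": "Submit time-off requests in the HR portal.", "source": "hr_faq.md"},
]
prompt = build_prompt("What is our vacation policy?", chunks)
print(prompt)
```

When an answer looks wrong, printing the assembled prompt like this is usually the quickest way to tell whether retrieval or generation is at fault.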

Evaluation: Actually Measuring Quality

Here’s where Mosaic really shines. Most people build RAG systems and have no idea if they’re any good. Let’s fix that:

from databricks.agents.evaluation import evaluate

# Create evaluation dataset
eval_data = [
    {
        "question": "What is our vacation policy?",
        "expected_answer": "Employees get 15 days PTO per year",
        "expected_sources": ["hr_handbook.pdf"]
    },
    {
        "question": "How do I submit an expense report?",
        "expected_answer": "Use the Concur system",
        "expected_sources": ["finance_procedures.pdf"]
    },
    # Add more examples...
]

# Run evaluation
results = evaluate(
    agent=agent,
    evaluation_data=eval_data,
    metrics=["answer_correctness", "retrieval_precision", "hallucination_rate"]
)

# View results
print(results.summary())

This gives you actual metrics. Not feelings, not demos that work perfectly, but real numbers you can track over time.
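If you want a feel for what a number like retrieval precision means, here is a rough sketch that scores one question by comparing retrieved sources against the `expected_sources` from the eval set. It is a plain-Python illustration under my own assumptions (document-level matching, duplicates collapsed), not how the framework computes its metrics. `retrieval_precision_recall` is a hypothetical helper and the retrieved list is made up.

```python
from typing import Iterable, Tuple


def retrieval_precision_recall(
    retrieved: Iterable[str], expected: Iterable[str]
) -> Tuple[float, float]:
    """Document-level precision and recall for a single question.

    Precision: share of distinct retrieved sources that were expected.
    Recall: share of expected sources that were actually retrieved.
    """
    retrieved_set, expected_set = set(retrieved), set(expected)
    hits = len(retrieved_set & expected_set)
    precision = hits / len(retrieved_set) if retrieved_set else 0.0
    recall = hits / len(expected_set) if expected_set else 0.0
    return precision, recall


# Made-up retrieval result: four chunks drawn from three distinct documents
retrieved_sources = [
    "hr_handbook.pdf",
    "finance_procedures.pdf",
    "it_security.pdf",
    "hr_handbook.pdf",
]
expected_sources = ["hr_handbook.pdf"]

precision, recall = retrieval_precision_recall(retrieved_sources, expected_sources)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

High recall with low precision, as in this example, usually means the right document is being found but it is buried among irrelevant ones, which points at ranking or the number of results rather than at the index itself.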

Advanced Features You’ll Want

Multi-Turn Conversations

Real users don’t ask single questions – they have conversations:

# Start a conversation
session = agent.create_session()

# Multiple turns
response1 = session.query("What is our vacation policy?")
response2 = session.query("How do I request time off?")  # Uses context from previous question
response3 = session.query("What about sick days?")  # Builds on the conversation

Custom Tools and Function Calling

Your agent can do more than just retrieve documents. Give it tools:

from databricks.agents.tools import Tool

def check_pto_balance(employee_id: str) -> dict:
    """Check remaining PTO balance for an employee"""
    # Query your HR system
    return {"remaining_days": 12, "total_days": 15}

# Add tool to agent
agent.add_tool(
    Tool(
        name="check_pto_balance",
        function=check_pto_balance,
        description="Check an employee's PTO balance"
    )
)

# Now the agent can use this
response = agent.query("How many vacation days do I have left?")  # Will call the tool

Guardrails and Safety

Production agents need safety rails:

agent.add_guardrails(
    # Don't answer questions outside scope
    scope_check=True,
    # Filter toxic content
    content_safety=True,
    # Don't leak sensitive data
    pii_detection=True,
    # Rate limiting
    max_queries_per_user=100,
    # Require citations
    require_sources=True
)

Deployment to Production

Once you’re happy with evaluation results, deployment is straightforward:

# Log to MLflow
import mlflow

with mlflow.start_run():
    mlflow.log_params(agent.get_config())
    mlflow.log_metrics(results.metrics)
    model_info = mlflow.pyfunc.log_model(
        artifact_path="agent",
        python_model=agent
    )

# Deploy as endpoint
from databricks import serving

serving.create_endpoint(
    name="company_docs_assistant",
    model_uri=model_info.model_uri,
    workload_size="Small",
    scale_to_zero_enabled=True
)

You now have a REST API endpoint with automatic scaling, monitoring, and governance. Query it like any other API:

import os
import requests

# Read the access token from the environment instead of hard-coding it
token = os.environ["DATABRICKS_TOKEN"]

response = requests.post(
    "https://your-workspace.databricks.com/serving-endpoints/company_docs_assistant/invocations",
    headers={"Authorization": f"Bearer {token}"},
    json={"query": "What is our vacation policy?"}
)

print(response.json())

Monitoring and Iteration

Production is just the beginning. Monitor how users actually interact with your agent:

# View query logs
logs = agent.get_query_logs(
    start_date="2024-01-01",
    end_date="2024-01-31"
)

# Find problematic queries
problematic = logs['user_feedback'] == 'negative'
problematic = logs[problematic]

# Analyze retrieval quality
retrieval_metrics = agent.analyze_retrieval(
    queries=logs['query'].tolist()
)

print("Average retrieval precision:", retrieval_metrics['precision'].mean())
print("Queries with low retrieval:", retrieval_metrics[retrieval_metrics['precision'] < 0.5])

Use these insights to improve your agent. Maybe you need better chunking, different embeddings, or to expand your document set.

Real-World Tips

Start Small, Expand Gradually

Don’t try to index your entire data lake on day one. Start with one use case, one document set. Get that working really well, then expand.

Invest in Evaluation Data

Build a good evaluation dataset from day one. Every time users report a problem, add it to your eval set. This becomes your regression test suite.
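One low-tech way to do this is to keep the eval set as a JSONL file and append every reported problem to it. The sketch below assumes that layout; `add_to_eval_set` and the file name are hypothetical, and the record fields just mirror the evaluation dataset shown earlier.

```python
import json
import tempfile
from pathlib import Path
from typing import Dict


def add_to_eval_set(path: Path, case: Dict) -> bool:
    """Append a case to a JSONL eval file unless the question is already there.

    Returns True if the case was added, False if it was a duplicate.
    """
    existing = set()
    if path.exists():
        with path.open(encoding="utf-8") as f:
            for line in f:
                if line.strip():
                    existing.add(json.loads(line)["question"].strip().lower())

    key = case["question"].strip().lower()
    if key in existing:
        return False

    with path.open("a", encoding="utf-8") as f:
        f.write(json.dumps(case) + "\n")
    return True


# Demo against a temporary file so nothing real gets overwritten
eval_path = Path(tempfile.mkdtemp()) / "eval_set.jsonl"
case = {
    "question": "How do I submit an expense report?",
    "expected_answer": "Use the Concur system",
    "expected_sources": ["finance_procedures.pdf"],
}
added_first = add_to_eval_set(eval_path, case)
added_again = add_to_eval_set(
    eval_path, dict(case, question="  how do I submit an expense report? ")
)
print(added_first, added_again)
```

The duplicate check only ignores case and surrounding whitespace, so paraphrased versions of the same question will still be added. For a regression suite that is arguably a feature.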

Monitor Token Usage

LLM calls cost money. Track your token usage and optimize. Maybe you don’t need to retrieve 10 chunks, maybe 3 is enough. Maybe you can use a smaller model for some queries.
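A quick back-of-the-envelope calculation shows why the number of retrieved chunks matters. Every number below (traffic, chunk size, prices) is a placeholder I made up, so plug in your own. `estimate_monthly_cost` is a hypothetical helper, not something the framework provides.

```python
def estimate_monthly_cost(
    queries_per_day: int,
    chunks_per_query: int,
    tokens_per_chunk: int,
    prompt_overhead_tokens: int,
    output_tokens: int,
    price_in_per_1k: float,
    price_out_per_1k: float,
    days: int = 30,
) -> float:
    """Rough monthly LLM spend: input tokens grow linearly with retrieved chunks."""
    input_tokens = prompt_overhead_tokens + chunks_per_query * tokens_per_chunk
    cost_per_query = (
        input_tokens / 1000 * price_in_per_1k
        + output_tokens / 1000 * price_out_per_1k
    )
    return cost_per_query * queries_per_day * days


# Placeholder assumptions, not real prices
common = dict(
    queries_per_day=1000,
    tokens_per_chunk=500,
    prompt_overhead_tokens=200,
    output_tokens=300,
    price_in_per_1k=0.002,
    price_out_per_1k=0.006,
)
cost_10 = estimate_monthly_cost(chunks_per_query=10, **common)
cost_3 = estimate_monthly_cost(chunks_per_query=3, **common)
print(f"10 chunks: ${cost_10:,.2f}/month, 3 chunks: ${cost_3:,.2f}/month")
```

Under these made-up numbers, dropping from 10 chunks to 3 cuts the bill from about $366 to about $156 a month. Whether answer quality survives the cut is exactly what your eval set is for.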

Version Everything

Use MLflow to version your agents, prompts, and retrieval strategies. You’ll want to roll back when experiments go wrong.

Tune Your Chunking Strategy

A 512-token chunk size is a common default, but it isn’t always optimal. Experiment with chunk sizes and overlap based on your document structure.
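To experiment with those two knobs, a sliding-window chunker is enough to get started. This is a minimal sketch that counts whitespace-separated words as a stand-in for tokens (an assumption; a real tokenizer will give different counts). `chunk_words` is a hypothetical helper.

```python
from typing import List


def chunk_words(text: str, chunk_size: int = 512, overlap: int = 64) -> List[str]:
    """Split text into overlapping chunks of at most `chunk_size` words.

    Consecutive chunks share `overlap` words so that sentences cut at a
    boundary still appear intact in at least one chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
small_chunks = chunk_words(sample, chunk_size=4, overlap=1)
for chunk in small_chunks:
    print(chunk)
```

Run your eval set against a few `chunk_size` and `overlap` combinations and keep whichever gives the best retrieval numbers for your documents. Structured docs with short sections often do better with smaller chunks than long narrative ones.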

Common Pitfalls

Pitfall 1: Ignoring Data Quality

Garbage in, garbage out. If your source documents are poorly formatted, outdated, or contradictory, your agent will be too.

Pitfall 2: Over-Engineering

Don’t build a complex multi-agent system when a simple RAG agent would work. Start simple, add complexity only when needed.

Pitfall 3: No Human Feedback Loop

Build in ways for users to flag bad answers. This feedback is gold for improving your system.

Pitfall 4: Forgetting About Latency

Users won’t wait 10 seconds for an answer. Optimize your retrieval, use smaller models where appropriate, and cache common queries.
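Caching is the cheapest of those wins. Here is a small sketch of an in-memory cache keyed on a normalized question, so trivially different phrasings hit the same entry. `answer_question` is a hypothetical stand-in for your real (slow) agent call, and the cache is deliberately simple: unbounded and per-process.

```python
from typing import Dict

call_count = 0  # counts how often the slow path actually runs


def answer_question(question: str) -> str:
    """Stand-in for the slow agent call; swap in your real query function."""
    global call_count
    call_count += 1
    return f"(answer to: {question})"


def normalize(question: str) -> str:
    """Lowercase and collapse whitespace so near-identical questions share a key."""
    return " ".join(question.lower().split())


_cache: Dict[str, str] = {}


def cached_query(question: str) -> str:
    """Return a cached answer when the normalized question was seen before."""
    key = normalize(question)
    if key not in _cache:
        _cache[key] = answer_question(question)
    return _cache[key]


first = cached_query("What is our vacation policy?")
second = cached_query("  what is our   VACATION policy? ")
print(call_count)
```

Remember to clear the cache (`_cache.clear()`) whenever the index is re-synced, otherwise users keep getting answers based on old documents. In production you would likely want a size limit and an expiry time as well.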

The Bottom Line

Databricks Mosaic AI Agent Framework isn’t just another LLM wrapper – it’s a complete production system for building RAG applications that actually work. The framework handles the infrastructure, monitoring, and deployment headaches, letting you focus on building agents that solve real problems.

The key differentiator is the tight integration with the Databricks platform. Your data is already in Delta Lake, your pipelines are in Databricks, your ML models are tracked in MLflow. Mosaic lets you build AI agents that naturally fit into this ecosystem without duct-taping together different services.

Start small, evaluate rigorously, iterate based on real user feedback, and you’ll end up with agents that actually deliver value instead of just impressive demos. The framework gives you the tools – now go build something useful.