After spending several months using Snowflake Snowpark, I’m really impressed with how it enhances the data engineering and data science experience within the Snowflake ecosystem. Essentially, Snowpark allows you to write and execute code directly inside Snowflake using languages like Python, Scala, and Java. For many workloads this removes the need for a separate external processing engine, which reduces complexity and speeds up data workflows. It’s particularly useful if you want to bring custom data processing logic, machine learning models, or complex transformations into Snowflake without managing additional infrastructure.

How Does It Work?

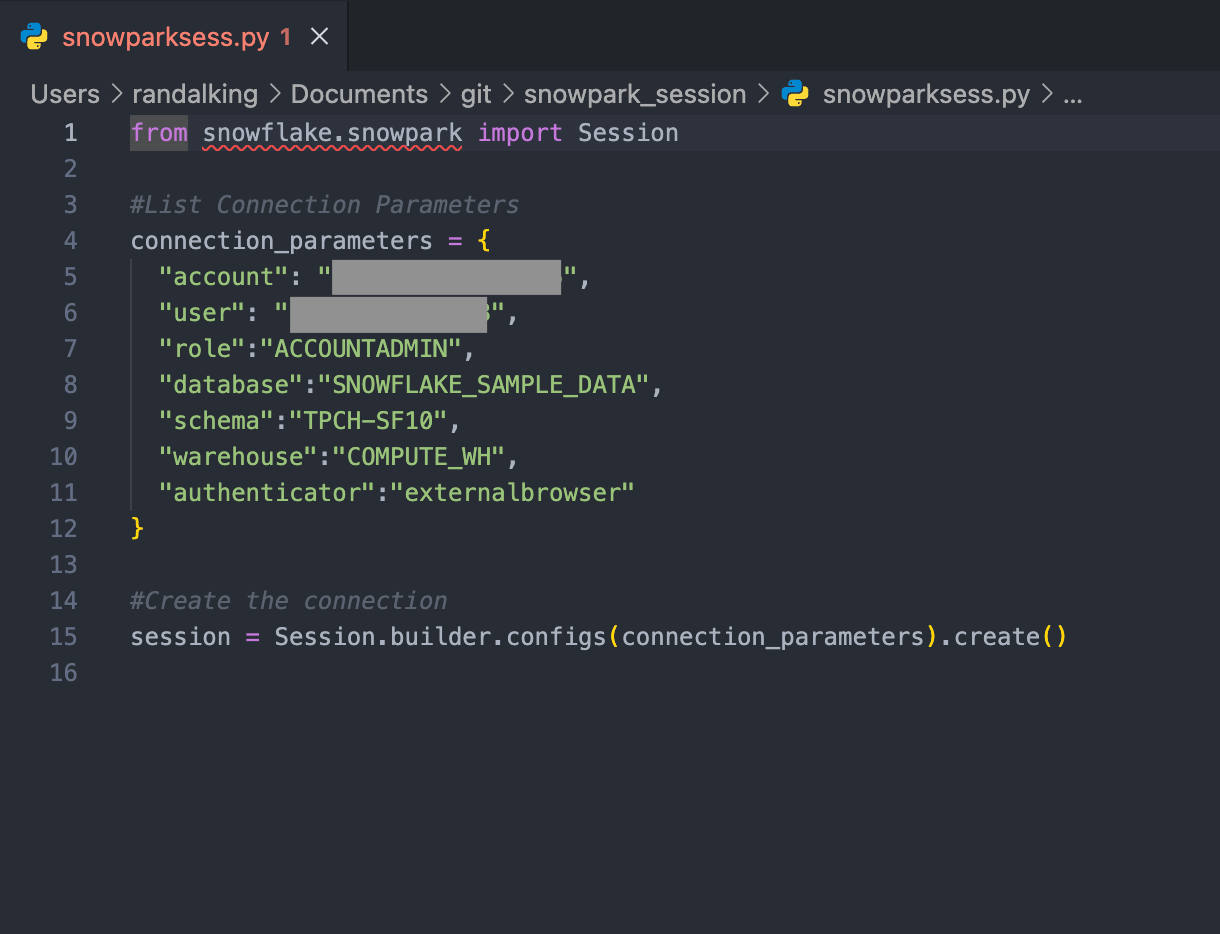

At its core, Snowpark provides a programming framework for building and running data-intensive applications directly on Snowflake. The main idea is that the data doesn’t need to leave Snowflake for processing. When you use the Snowpark DataFrame API, your code is translated into SQL behind the scenes and executed on a Snowflake virtual warehouse, so parallelization and resource allocation are handled by Snowflake’s cloud infrastructure rather than by your client machine. In effect, Snowflake becomes an integrated execution engine for your code, whether you’re building predictive models, running complex transformations, or managing ETL workflows. To better understand how Snowflake’s underlying infrastructure handles this, check out my deep dive on Snowflake’s architecture.
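
A toy model makes the "data doesn’t leave Snowflake" point concrete. The sketch below is plain Python, not Snowpark: the table is an in-memory list, and `client_side_totals` and `server_side_totals` are hypothetical names. It compares pulling every row to the client and aggregating there (the classic extract-then-pandas pattern) with aggregating where the data lives and returning only the result, which is the pattern Snowpark follows.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical row shape for an ORDERS table: (region, amount).
Row = Tuple[str, float]


def aggregate(rows: List[Row]) -> Dict[str, float]:
    """Sum amounts per region."""
    totals: Dict[str, float] = defaultdict(float)
    for region, amount in rows:
        totals[region] += amount
    return dict(totals)


def client_side_totals(table: List[Row]) -> Tuple[Dict[str, float], int]:
    """Extract-then-process: every row is shipped to the client before aggregating.

    Returns (totals, number of rows transferred).
    """
    fetched = list(table)  # simulates transferring the full table over the network
    return aggregate(fetched), len(fetched)


def server_side_totals(table: List[Row]) -> Tuple[Dict[str, float], int]:
    """Push-down: aggregate next to the data, ship only one row per region.

    Returns (totals, number of rows transferred).
    """
    result = aggregate(table)  # simulates work done on the warehouse
    return result, len(result)


if __name__ == "__main__":
    regions = ["EMEA", "AMER", "APAC"]
    table = [(regions[i % 3], float(i)) for i in range(10_000)]
    local, moved_local = client_side_totals(table)
    pushed, moved_pushed = server_side_totals(table)
    assert local == pushed  # same answer either way
    print(f"rows transferred: client-side={moved_local}, push-down={moved_pushed}")
```

Both strategies return the same totals, but with 10,000 rows and three regions the client-side version moves 10,000 rows while the push-down version moves 3. The gap grows with the table, which is why keeping the computation inside Snowflake pays off on large data.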

For example, with Snowpark you can define user-defined functions (UDFs) and stored procedures that run inside the Snowflake environment. You write the logic in Python, Java, or Scala, and Snowflake executes it in a sandboxed runtime on the warehouse’s compute nodes; you then call it from SQL or from a DataFrame just like a built-in function. You get the scalability of Snowflake’s architecture while writing code in your preferred language. This tight integration between code execution and storage combines the flexibility of a general-purpose programming environment (such as Python with pandas) with the performance of a distributed cloud data warehouse.
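
Here is a minimal sketch of that workflow, assuming the Python flavor of Snowpark. The handler `normalize_phone`, the UDF name `NORMALIZE_PHONE`, and the `customers` table are hypothetical examples. The handler is an ordinary Python function, so you can unit-test it locally; the registration step is shown only as a comment because it needs the `snowflake-snowpark-python` package and a live `Session`.

```python
import re
from typing import Optional


def normalize_phone(raw: Optional[str]) -> Optional[str]:
    """Hypothetical scalar UDF handler: normalize US phone numbers.

    Snowflake calls a scalar Python UDF once per row; SQL NULL arrives as None,
    and returning None produces NULL in the result.
    """
    if raw is None:
        return None
    digits = re.sub(r"\D", "", raw)  # keep digits only
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop a leading US country code
    if len(digits) != 10:
        return None  # unparseable input becomes NULL
    return f"({digits[:3]}) {digits[3:6]}-{digits[6:]}"


# Registration sketch (not executed here; assumes snowflake-snowpark-python is
# installed and `session` is an active snowflake.snowpark.Session):
#
#   from snowflake.snowpark.types import StringType
#   session.udf.register(
#       normalize_phone,
#       return_type=StringType(),
#       input_types=[StringType()],
#       name="NORMALIZE_PHONE",
#   )
#
# The temporary UDF can then be called from SQL in the same session, e.g.
#   SELECT NORMALIZE_PHONE(phone) FROM customers;

if __name__ == "__main__":
    # Local unit tests of the handler logic, before it ever touches Snowflake.
    assert normalize_phone("+1 (555) 123-4567") == "(555) 123-4567"
    assert normalize_phone("555.123.4567") == "(555) 123-4567"
    assert normalize_phone("12345") is None
    assert normalize_phone(None) is None
    print("handler tests passed")
```

Keeping the logic in a plain function like this lets you debug it with normal Python tooling; only the thin registration call depends on Snowflake.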

Dataframes? Kinda.

Another powerful feature is how Snowpark handles dataframe-like operations. In Python, for example, you can use Snowpark’s DataFrame API to manipulate and transform data in a style similar to pandas, but the heavy lifting is done on Snowflake’s compute resources. That makes it attractive for data scientists and analysts who already know pandas but need Snowflake’s scalability for larger datasets. One difference from pandas is that a Snowpark DataFrame is evaluated lazily: each transformation extends a query, and nothing runs until you call an action such as `collect()` or `show()`. At that point the whole chain is sent to Snowflake as SQL and optimized by Snowflake’s query engine, so you can run complex transformations on large datasets without hand-tuning the SQL yourself. Speaking of optimization, if you want to dig deeper into query performance, I’ve got some practical tips in my guide on Snowflake query optimization.
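
To show what "a DataFrame that describes a query" means mechanically, here is a toy class. It is not Snowpark’s implementation or API; `LazyFrame` and `to_sql` are hypothetical names. It is a minimal sketch of lazy evaluation: each method returns a new immutable object that records one more step, and the whole chain is compiled into a single SQL statement only when `to_sql()` is called.

```python
from dataclasses import dataclass, replace
from typing import Tuple


@dataclass(frozen=True)
class LazyFrame:
    """Toy, hypothetical stand-in for a lazily evaluated DataFrame.

    Each method returns a new immutable LazyFrame with one more recorded step;
    no data is read, and nothing is executed until to_sql() is called.
    """

    table: str
    columns: Tuple[str, ...] = ("*",)
    predicates: Tuple[str, ...] = ()
    group_keys: Tuple[str, ...] = ()

    def filter(self, predicate: str) -> "LazyFrame":
        # Record a WHERE condition; multiple filters are ANDed together.
        return replace(self, predicates=self.predicates + (predicate,))

    def group_by(self, *keys: str) -> "LazyFrame":
        return replace(self, group_keys=tuple(keys))

    def select(self, *columns: str) -> "LazyFrame":
        return replace(self, columns=tuple(columns))

    def to_sql(self) -> str:
        # Compile the recorded steps into a single SQL statement.
        sql = f"SELECT {', '.join(self.columns)} FROM {self.table}"
        if self.predicates:
            sql += " WHERE " + " AND ".join(self.predicates)
        if self.group_keys:
            sql += " GROUP BY " + ", ".join(self.group_keys)
        return sql


if __name__ == "__main__":
    plan = (
        LazyFrame("ORDERS")
        .filter("AMOUNT > 100")
        .filter("STATUS = 'SHIPPED'")
        .group_by("REGION")
        .select("REGION", "SUM(AMOUNT) AS TOTAL")
    )
    # Prints: SELECT REGION, SUM(AMOUNT) AS TOTAL FROM ORDERS
    #         WHERE AMOUNT > 100 AND STATUS = 'SHIPPED' GROUP BY REGION
    print(plan.to_sql())
```

The real Snowpark DataFrame uses similar method chaining (`filter`, `group_by`, `select`) and sends its generated SQL to Snowflake when an action such as `collect()` or `show()` runs. A real implementation tracks a full relational plan rather than a few string fields, and it is Snowflake’s query optimizer, not the client, that decides how to execute it.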

Pros and Cons

Because Snowpark relies on Snowflake’s native query optimizer, Snowpark queries run efficiently and scale with the virtual warehouse they run on, without much additional effort from the user. This is key when you’re running data pipelines or near-real-time data processing.

That being said, there are a couple of areas that could still use improvement. First, while Snowpark does a good job of abstracting away the complexity of Snowflake’s architecture, that abstraction can make certain performance bottlenecks hard to troubleshoot. Some error messages are vague, and when a complex transformation is built through the DataFrame API, it is harder to get the kind of query-execution insight you would have in a hand-written SQL workflow. More detailed debugging tools would be a real asset.

Second, while Snowpark handles most workloads very well, processing extremely large datasets, especially with custom, compute-intensive transformations such as NLP or deep learning model training, can be slower than what you might expect from specialized data processing frameworks.

Conclusion

Overall, Snowpark is a fantastic tool for teams that are already in the Snowflake ecosystem. It allows you to streamline your data pipelines, minimize the need for additional infrastructure, and perform more complex tasks like machine learning and advanced data transformation right within Snowflake. If you’re curious about Snowflake’s AI capabilities beyond just data processing, you might want to explore Snowflake Cortex AI, which brings machine learning and generative AI directly into the platform. With a bit more refinement in error handling and performance optimization for ultra-large datasets, I think it’ll only get better.